Generalized search (or catch all, or Swiss army knife) stored procedures are one of the cases where I personally feel that T-SQL does not offer a fantastic solution. Unfortunately, neither does LINQ to SQL nor LINQ to Entities. That said, no tool does a fantastic job with that use case so this is not an indictment of LINQ.

It is true that using LINQ may allow a development team to be more productive, but it is also true that LINQ complicates monitoring and performance tuning. At the end of the day that means that a more skilled (pronounced: expensive) database administration staff may be required.

The Short Version

One big issue with supporting the queries that LINQ generates is that the queries are strange – they almost look like they’ve been through an obfuscator. Another is that there’s not a clean, easy way to add a RECOMPILE or an OPTIMIZE FOR UNKNOWN hint. Sure, it’s nice that LINQ doesn’t allow developers to add any crazy, dangerous hint that they want to (*cough* NOLOCK *cough*), but there is a reason that query hints exist. Use of hints should indeed be rare, but at times they really are necessary.

The current state of practice in shops that use LINQ (in my experience at least) is for the development team to start out using LINQ, and to re-factor logic into a TSQL-based stored procedure for those cases that LINQ does not handle well. My issue is that those tend to be the really complex cases, and frequently are the cases where we may end up with dynamic SQL inside the stored procedure once we are done performance tuning. As a performance tuning person, that’s exactly the opposite of what I’d like to see. I have no doubt that the average SQL developer can get the easy stuff right. What I would like to see something like LINQ help with is the weird, gnarly, dynamic SQL that goes along with the hardest cases.

Most of us have seen examples of completely insane TSQL that was generated by LINQ. Epic fails are real, I have seen them. I wouldn’t say they’re common though. In my experience what really keeps LINQ from shining is normally that it runs afoul of much more mundane issues such as bad execution plans which arise from the wrong value being sniffed for the wrong parameter at the wrong time. In TSQL when there is a parameter sensitivity issue, more often than not a query hint is added, but LINQ makes it difficult to add query hints without really offering an acceptable alternative solution.

The Long Version Part 1: What part of “Project1 Extent1 LEFT JOIN” Don’t You Understand?

I’ll be writing some LINQ code for this post. Don’t worry, I won’t make a habit of it, and I’ll keep things fairly simple for this. To create the database that I use in the examples below, I started with a standard copy of the Adventure Works database. Then I enlarged it using Jonathan Kehayias’ script (read his blog post here). Yes, I am still using Adventure Works for examples. Yes, I am old. After creating the database I started a new C# console application in Visual Studio and generated an ADO.Net data model from it.

Now consider the following code

    static void Main(string[] args)
    {
        AdventureWorks2012_bigEntities ctx = new AdventureWorks2012_bigEntities();
        var q =
            from p in ctx.People
            select p.EmailAddresses;
        Console.Out.WriteLine(q.ToArray().Length);
    }

If we were to write a TSQL query for the same data by hand, it might look like this

    SELECT EmailAddress.*
    FROM Person.Person
    INNER JOIN Person.EmailAddress ON Person.BusinessEntityID = EmailAddress.BusinessEntityID;

No, it’s not great that we’re using “SELECT *” but that’s not my point, and contrary to popular belief it actually is possible to get LINQ to only bring back a few columns … but that would be another blog post. For this post, I want to compare the preceding query with the following one, which is what LINQ generates in my lab setup.

SELECT [Project1].[BusinessEntityID] AS [BusinessEntityID], [Project1].[C1] AS [C1], [Project1].[BusinessEntityID1] AS [BusinessEntityID1], [Project1].[EmailAddressID] AS [EmailAddressID], [Project1].[EmailAddress] AS [EmailAddress], [Project1].[rowguid] AS [rowguid], [Project1].[ModifiedDate] AS [ModifiedDate] FROM ( SELECT [Extent1].[BusinessEntityID] AS [BusinessEntityID], [Extent2].[BusinessEntityID] AS [BusinessEntityID1], [Extent2].[EmailAddressID] AS [EmailAddressID], [Extent2].[EmailAddress] AS [EmailAddress], [Extent2].[rowguid] AS [rowguid], [Extent2].[ModifiedDate] AS [ModifiedDate], CASE WHEN ([Extent2].[BusinessEntityID] IS NULL) THEN CAST(NULL AS int) ELSE 1 END AS [C1] FROM [Person].[Person] AS [Extent1] LEFT OUTER JOIN [Person].[EmailAddress] AS [Extent2] ON [Extent1].[BusinessEntityID] = [Extent2].[BusinessEntityID] ) AS [Project1] ORDER BY [Project1].[BusinessEntityID] ASC, [Project1].[C1] ASC

No, that’s not super readable as is … but it gets a lot better if we take some time to format the query. Here’s what I came up with after a few minutes of formatting.

    SELECT [Project1].[BusinessEntityID] AS [BusinessEntityID],
           [Project1].[C1] AS [C1],
           [Project1].[BusinessEntityID1] AS [BusinessEntityID1],
           [Project1].[EmailAddressID] AS [EmailAddressID],
           [Project1].[EmailAddress] AS [EmailAddress],
           [Project1].[rowguid] AS [rowguid],
           [Project1].[ModifiedDate] AS [ModifiedDate]
    FROM (
        SELECT [Extent1].[BusinessEntityID] AS [BusinessEntityID],
               [Extent2].[BusinessEntityID] AS [BusinessEntityID1],
               [Extent2].[EmailAddressID] AS [EmailAddressID],
               [Extent2].[EmailAddress] AS [EmailAddress],
               [Extent2].[rowguid] AS [rowguid],
               [Extent2].[ModifiedDate] AS [ModifiedDate],
               CASE WHEN ([Extent2].[BusinessEntityID] IS NULL) THEN CAST(NULL AS int) ELSE 1 END AS [C1]
        FROM [Person].[Person] AS [Extent1]
        LEFT OUTER JOIN [Person].[EmailAddress] AS [Extent2] ON [Extent1].[BusinessEntityID] = [Extent2].[BusinessEntityID]
    ) AS [Project1]
    ORDER BY [Project1].[BusinessEntityID] ASC, [Project1].[C1] ASC

One minor point that I won’t dwell on, because it’s kind of nit-picky and probably depends on which version of Entity Framework (EF) is used, is that there is an ORDER BY clause on the query even though I didn’t specify any ordering in my LINQ query. SQL Server licenses are expensive – personally I would prefer that those CPU cycles not be wasted when I could sort the data in my C#. Odds are the application is running on a processor that does not cost as much to license. But again, small point and not my main point.

My main point for this section is this – if you’re not a DBA, try to put yourself in your DBA’s shoes. Which query makes more sense to you, the one I wrote in TSQL or the one that was generated by LINQ? If you are looking for a performance issue, which one would you rather try to wrap your head around to make a performance recommendation? And this is a relatively straightforward example. Realistic examples of Entity Framework code produce much longer and much more confusing queries.

Most EF gurus that I’ve talked to say the answer is simple – don’t look at the query, focus on the execution plan. If it’s a system that I’m supporting then fair enough, I personally am pretty comfortable with that. But I have been working with SQL Server for a very long time, and I am very comfortable reading execution plans. Not everyone is as comfortable with them. That means that workloads which involve LINQ are going to tend to skew towards the more experienced end of the performance DBA spectrum for support. In a smaller shop where one of the developers has been “nominated” as the DBA, or which is getting by with a junior DBA, it’s possible that there just simply won’t be the expertise on staff to work through complicated plans. Even at places which do have an experienced performance person on staff, they’re usually pretty busy already and not looking for more work to do.

One important clarification before I move on – I’m not actually saying that one should not use LINQ because it generates ugly SQL. My point is more that there is a trade-off to keep in mind. I think it’s pretty much inevitable that LINQ to Entities will turn up in most organizations because coding with it is faster than with, say, ADO.Net. My point is that there is a cost – both the amount and the complexity of DBA work increase. In all but the smallest organizations I’d suggest planning to bring on a qualified performance DBA before adopting LINQ, which is going to consume some of the cost savings from the development efficiencies.

Come On, EF, Take a Hint Already

So ugly queries are unfortunate, and yes they generate some extra work when they need to be tuned. But we’re all professionals here, that’s not going to be a deal breaker for me. Personally my issue is actually that it is very difficult to solve certain performance issues in LINQ. Consider the following code

    class Program
    {
        static IQueryable<SalesOrderDetailEnlarged> DemoQuery(int prodID)
        {
            AdventureWorks2012_bigEntities ctx = new AdventureWorks2012_bigEntities();
            var q1 =
                from d in ctx.SalesOrderDetailEnlargeds
                where d.ProductID == prodID
                select d;
            return q1;
        }

        static void Main(string[] args)
        {
            int n1 = DemoQuery(42).ToArray().Length; // ToArray to make really really extra sure query runs since we're throwing away results
            Console.Out.WriteLine(n1.ToString() + " rows");
        }
    }

Which gives me this output

0 rows

Obviously somebody is not a Douglas Adams fan. But we are not here to talk about literature, we’re here to talk performance. This is a fairly small DB that fits in memory so there isn’t much point in studying runtimes. Let’s look at I/O. Running this SQL query on the database server

    USE master
    GO
    SELECT qs.last_logical_reads, st.text, qp.query_plan
    FROM sys.dm_exec_query_stats qs
    CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) st
    CROSS APPLY sys.dm_exec_query_plan(qs.plan_handle) qp
    WHERE st.text LIKE '%extent1%' -- Quick and dirty check for a LINQ query
    AND qp.dbid = DB_ID('AdventureWorks2012_big'); -- Check DB ID to prevent past queries against plan cache from matching

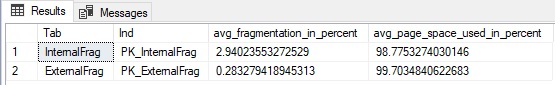

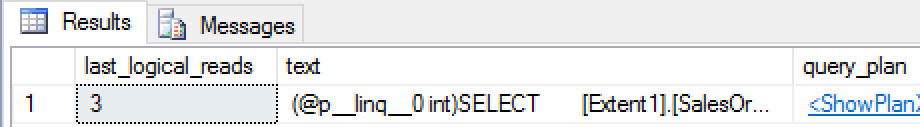

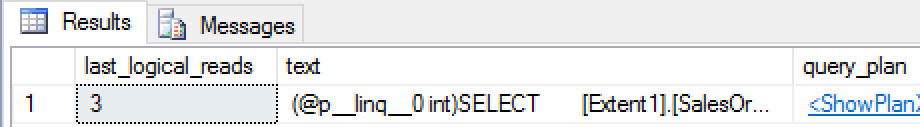

I see this

which by itself isn’t very exciting. But now let’s run that same C# code again with a different product. Below I am only showing the lines that changed (updated 42 to 870).

    int n1 = DemoQuery(870).ToArray().Length; // ToArray to make really really extra sure query runs since we're throwing away results
    Console.Out.WriteLine(n1.ToString() + " rows");

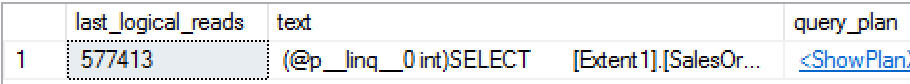

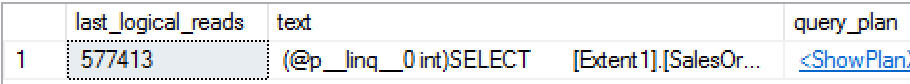

We see a lot more rows and a lot more IO

192208 rows

Assuming each read looks at a page and that we are using 8KB pages, that means that this query is sifting through 4,619,304 KB of data. But if we check the size of the table (e.g. using sp_spaceused), the table only contains 601,472 KB worth of data. To process this query we just read more than 7.5 times as much data as we would have by just reading the entire table in one shot.
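The arithmetic above can be checked on the server itself. What follows is only a rough sketch, assuming the lab database name used earlier in this post and one 8 KB page per logical read. The constant 601472 is just the table's data size quoted above, and the LIKE filters are an illustrative way to pick the demo query out of the plan cache.

```sql
USE AdventureWorks2012_big;
GO
-- Table size as reported by SQL Server; the "data" column is the figure quoted in the text.
EXEC sp_spaceused N'Sales.SalesOrderDetailEnlarged';

-- Convert logical reads to KB, assuming each read touches one 8 KB page,
-- and compare against the table's data size (601,472 KB in this lab setup).
SELECT qs.last_logical_reads,
       qs.last_logical_reads * 8 AS last_read_kb,
       CAST(qs.last_logical_reads * 8 / 601472.0 AS decimal(10, 2)) AS times_table_size
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE st.text LIKE N'%SalesOrderDetailEnlarged%Extent1%'
  AND st.text NOT LIKE N'%dm_exec_query_stats%'; -- keep this monitoring query out of its own results
```

With the numbers quoted above, 4,619,304 KB divided by 601,472 KB comes to roughly 7.7 passes over the table.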

What’s happening here? Let’s look at those two columns that I’ve been ignoring from the SQL query above (text and query_plan). They contain the query and the execution plan (I did a little formatting for clarity).

    SELECT
        [Extent1].[SalesOrderID] AS [SalesOrderID],
        [Extent1].[SalesOrderDetailID] AS [SalesOrderDetailID],
        [Extent1].[CarrierTrackingNumber] AS [CarrierTrackingNumber],
        [Extent1].[OrderQty] AS [OrderQty],
        [Extent1].[ProductID] AS [ProductID],
        [Extent1].[SpecialOfferID] AS [SpecialOfferID],
        [Extent1].[UnitPrice] AS [UnitPrice],
        [Extent1].[UnitPriceDiscount] AS [UnitPriceDiscount],
        [Extent1].[LineTotal] AS [LineTotal],
        [Extent1].[rowguid] AS [rowguid],
        [Extent1].[ModifiedDate] AS [ModifiedDate]
    FROM [Sales].[SalesOrderDetailEnlarged] AS [Extent1]
    WHERE [Extent1].[ProductID] = @p__linq__0

Here is what I notice when I look at these. First, this is a parameterized query. On the whole that’s probably a good thing. When parameterized SQL is used, by default SQL Server will reuse the execution plan from the first call to the query, which on the whole is also a good thing. But in this specific case, the first call to this query didn’t return any data, so it generated an execution plan that doesn’t work well when a large amount of data comes back. The plan uses an index seek to locate records first and then, as a second step, retrieves data from the table with a key lookup operator. That’s a fantastic plan for cases where we only expect a few rows, but when a large amount of data is scattered through the table, this plan tends to hit each page multiple times.

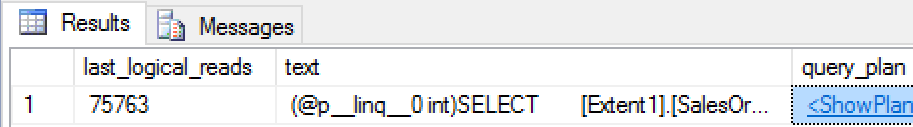

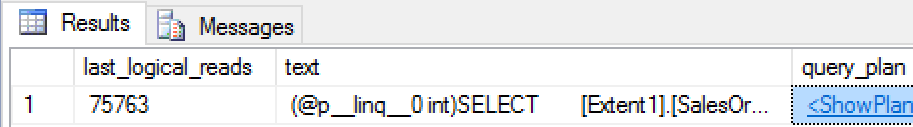

For comparison, I ran DBCC FREEPROCCACHE to clear the plan cache (don’t do this in prod) and then re-ran my C# code which is querying for product ID 870. We now see a much more reasonable amount of IO, and an execution plan which simply does a single scan. This is a fantastic plan for product ID 870 even if it isn’t ideal for product ID 42, and it is not as bad for product 42 as the first plan was for 870.
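On a server that other people share, a narrower variant is to evict only the one plan instead of flushing the whole cache. This is a sketch rather than what was run for this post: DBCC FREEPROCCACHE accepts a plan handle, and the LIKE filters below are an illustrative assumption for locating the demo query.

```sql
DECLARE @plan_handle varbinary(64);

-- Pick the cached plan for the demo query (illustrative filter; adjust to taste).
SELECT TOP (1) @plan_handle = qs.plan_handle
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE st.text LIKE N'%SalesOrderDetailEnlarged%Extent1%'
  AND st.text NOT LIKE N'%dm_exec_query_stats%' -- skip this lookup query itself
ORDER BY qs.last_logical_reads DESC;

-- Evict only that plan; the next execution of the query compiles a fresh one.
IF @plan_handle IS NOT NULL
    DBCC FREEPROCCACHE (@plan_handle);
```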

This is of course a pretty straightforward case of parameter sensitivity. In this particular case, parameter sniffing is hurting us rather than helping. Parameter sniffing is a SQL Server behavior, not a LINQ behavior, so why am I discussing it here? I have devoted this much time to it because this kind of issue is typically fixed by adding either a RECOMPILE or an OPTIMIZE FOR hint, and EF (as well as LINQ to SQL before it) doesn’t have a great way to add query hints. This is already a long post so I won’t dive into these hints in depth, but briefly the RECOMPILE hint prevents SQL Server from caching the plan (no plan reuse, fresh compile every time) while the OPTIMIZE FOR hint allows for plan reuse but changes which parameter value is used for optimization during compilation.
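For readers who have not used these hints, here is roughly what they look like on a hand-written, parameterized equivalent of the demo query. This is a sketch: the parameter name @p and the SELECT * shape are illustrative choices, not what EF emits.

```sql
USE AdventureWorks2012_big;
GO
-- RECOMPILE: a fresh plan is compiled for every execution and not reused.
EXEC sp_executesql
    N'SELECT * FROM Sales.SalesOrderDetailEnlarged WHERE ProductID = @p OPTION (RECOMPILE);',
    N'@p int',
    @p = 870;

-- OPTIMIZE FOR a specific value: the plan is cached and reused,
-- but it is always built as if @p were 870, whatever value is actually passed.
EXEC sp_executesql
    N'SELECT * FROM Sales.SalesOrderDetailEnlarged WHERE ProductID = @p OPTION (OPTIMIZE FOR (@p = 870));',
    N'@p int',
    @p = 42;

-- OPTIMIZE FOR UNKNOWN: the plan is cached and reused,
-- built from average statistics rather than from any sniffed value.
EXEC sp_executesql
    N'SELECT * FROM Sales.SalesOrderDetailEnlarged WHERE ProductID = @p OPTION (OPTIMIZE FOR UNKNOWN);',
    N'@p int',
    @p = 42;
```

In hand-written TSQL this is a one-line change per query, which is exactly the option that LINQ does not readily expose.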

Surviving LINQ

So the problem stands – LINQ is part of our landscape, the issue discussed above probably isn’t going away anytime soon, and we still have to administer our servers. There are a few ways to do this.

Plan Guides

I personally get the most mileage out of plan guides. Plan guides are horrible. And they suck. And they are bad. Did I mention that they suck? At the end of the day, however much I may hate using plan guides in general, they do work for this problem. Plan guides change the way that a query is optimized. One of the things that we can do with them is to instruct the optimizer to inject a query hint every time it sees a given query. Here is what I typically do personally when I’m trying to fix a problematic LINQ workload.

- Watch the plan cache for queries that have bad plans and also appear to be from LINQ. (It’s obvious which ones are from LINQ, trust me.) Discussion on how to do this is out of scope for this post.

- Find the handle of the bad plan, e.g. from dm_exec_query_stats

- Call sp_create_plan_guide_from_handle to create a plan guide. At this point we have pinned the bad plan into the cache. Do not stop here.

- In Management Studio, locate the new plan guide, right click on it, and generate a DROP and CREATE script for it.

- In the create part of the resulting script, delete the hard-coded plan (it’s a big ugly blob of XML)

- Add the hint you’d like to the @hints parameter (probably OPTIMIZE FOR if there is a parameter value that yields a “good enough” plan for all values, and probably RECOMPILE if this isn’t the case)

- Run the script to re-create the guide.
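The steps above can be sketched entirely in TSQL. Treat this as a sketch under assumptions, not the exact procedure described: it swaps the Management Studio scripting step for a read from sys.plan_guides, the guide names (PG_Demo_FromHandle, PG_Demo_OptimizeForUnknown) are made up for illustration, and the LIKE filters are a quick way to find the demo query. Try it on a test server first.

```sql
USE AdventureWorks2012_big;
GO
DECLARE @plan_handle varbinary(64),
        @offset      int;

-- Find the handle of the bad plan (illustrative filter for the demo query).
SELECT TOP (1)
       @plan_handle = qs.plan_handle,
       @offset      = qs.statement_start_offset
FROM sys.dm_exec_query_stats AS qs
CROSS APPLY sys.dm_exec_sql_text(qs.sql_handle) AS st
WHERE st.text LIKE N'%SalesOrderDetailEnlarged%Extent1%'
  AND st.text NOT LIKE N'%dm_exec_query_stats%'
ORDER BY qs.last_logical_reads DESC;

IF @plan_handle IS NULL
BEGIN
    RAISERROR(N'Demo query not found in the plan cache.', 16, 1);
    RETURN;
END;

-- Create a guide from the cached plan. This pins the bad plan, so do not stop here.
EXEC sp_create_plan_guide_from_handle
     @name                   = N'PG_Demo_FromHandle',
     @plan_handle            = @plan_handle,
     @statement_start_offset = @offset;

-- Capture the exact statement, batch and parameter text that the guide recorded,
-- then drop the guide that carries the hard-coded plan.
DECLARE @stmt   nvarchar(max),
        @batch  nvarchar(max),
        @params nvarchar(max);

SELECT @stmt = query_text, @batch = scope_batch, @params = parameters
FROM sys.plan_guides
WHERE name = N'PG_Demo_FromHandle';

EXEC sp_control_plan_guide N'DROP', N'PG_Demo_FromHandle';

-- Re-create the guide with a hint in place of the plan XML.
EXEC sp_create_plan_guide
     @name            = N'PG_Demo_OptimizeForUnknown',
     @stmt            = @stmt,
     @type            = N'SQL',
     @module_or_batch = @batch,
     @params          = @params,
     @hints           = N'OPTION (OPTIMIZE FOR UNKNOWN)';
```

The statement text has to match what the application sends character for character, which is why the sketch reuses the text captured by the first guide rather than retyping it.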

Query Store

Query Store is a new feature in SQL Server 2016. This should be a much easier way of getting an effect similar to what I describe for plan guides above, assuming that the query is recompiled often enough that a “good” plan can be found in the store. As long as there is a single plan that always works well enough, simply pin that execution plan using the GUI. The main reasons that I do not list this first are that I haven’t personally done this yet (haven’t done a MSSQL 2016 project that also uses LINQ yet), and that there are, at the time of this writing, still relatively few places that have 2016 running in production.
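For those who prefer scripts to the GUI, plan forcing is also exposed through TSQL. The following is an untested-in-anger sketch, assuming Query Store is enabled on the lab database; the LIKE filter is illustrative, and the two ID variables are deliberately left NULL as placeholders to be filled in from the first query's output.

```sql
USE AdventureWorks2012_big;
GO
-- List the plans Query Store has captured for the demo query, with their average I/O.
SELECT q.query_id,
       p.plan_id,
       p.is_forced_plan,
       rs.count_executions,
       rs.avg_logical_io_reads,
       qt.query_sql_text
FROM sys.query_store_query_text AS qt
JOIN sys.query_store_query AS q ON q.query_text_id = qt.query_text_id
JOIN sys.query_store_plan AS p ON p.query_id = q.query_id
JOIN sys.query_store_runtime_stats AS rs ON rs.plan_id = p.plan_id
WHERE qt.query_sql_text LIKE N'%SalesOrderDetailEnlarged%Extent1%'
ORDER BY q.query_id, rs.avg_logical_io_reads;

-- Placeholders: replace NULL with the query_id / plan_id of the "good enough" plan from above.
DECLARE @query_id bigint = NULL,
        @plan_id  bigint = NULL;

IF @query_id IS NOT NULL AND @plan_id IS NOT NULL
    EXEC sp_query_store_force_plan @query_id = @query_id, @plan_id = @plan_id;
```

The forcing can be undone later with sp_query_store_unforce_plan, which takes the same two arguments.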

Stored Procedures

A more traditional way of coping with LINQ strangeness in general is to recode the problematic query in TSQL as a stored procedure. This is an excellent approach for some cases, but frequently pushing SELECT statements down into a stored procedure is a problem if there is any amount of polymorphism. Is it possible to list all of the queries that the code may issue? Do most of the queries always join to the same set of tables regardless of parameter values? Is the column list that gets returned always the same? If the answer to all of these questions is yes, then it won’t be too painful to wrap a lot of the SELECT logic into stored procedures. But at the same time, if there is that little polymorphism, is there really much benefit to using LINQ?

Entity Framework Interceptors

In the Entity Framework, at least in recent versions, it’s possible for the developers to modify how TSQL code gets generated by using an interceptor. In my mind at least, interceptors have a lot in common with plan guides. They allow us to inject query hints, and they are kind of icky. Personally, if I’m the performance DBA for a project I’d prefer using a plan guide because it usually requires less coordination. I am likely to be the one that decides to fix a problem query using a hint, and if my only two options are plan guides and interceptors then at least the plan guide can be added without coordinating a code change with the developers. Besides, I don’t want anybody getting the idea that they could write an interceptor that litters the code with NOLOCK hints, and it’s possible this could happen if I call attention to that particular EF feature.

Priming the Cache

I mention this mostly to try to convince the reader not to ever try it. This will not work in the long run. As we saw earlier, it may be possible to avoid parameter sensitivity issues by just ensuring that the first execution of each query has reasonable parameter values. Why not just run a controlled, hand-tuned workload to load good plans into the cache?

The most immediate problem is that plans are added to and ejected from the cache all the time. Does your data change? If so then so do your stats, and after a relevant statistic changes the query will be recompiled. Is your workload really predictable enough to do this? If not, it’s possible one of the plans that you “seeded” can fall out of the cache if it isn’t used at a certain time of day and … again … cause a new compilation next time it is executed. Most importantly, does anybody on the team have the cycles to maintain a priming script as the development team changes the behavior of the product over time?

And of course, there is the assumption that there is one single execution plan that is reasonable for all parameter values. That’s not always the case.

What Would Make Me Happy

In conclusion, I should probably try to be constructive and discuss possible solutions. Not that I have the answers. If I knew how to make something better than LINQ I would not be a nearly anonymous performance tuning professional that writes overly long blog posts. I would be a famous language architect that is too busy living the good life to be bothered writing.

One thing that I would not change – I’m not sure I’d add an easy way for developers to add any hint that they’d like to. Yes, all query hints do exist for a reason and we do need a way to use them, but most hints really are not needed that often. For the one occasion every year that I decide I need to use a FORCESEEK hint I don’t think I’d necessarily mind using a plan guide. Much. It’s a pain, but I am happy to live with a little discomfort if it means that I don’t really have to worry about NOLOCK hints getting overused anymore.

I guess what I’d personally like to see would be extension methods for the hints that are actually needed relatively often in practice. For example wouldn’t it be cool if we could do this

    var q1 =
        (from d in ctx.SalesOrderDetailEnlargeds
         where d.ProductID == prodID
         select d).OptimizeForUnknown();

or if OptimizeForUnknown is too MSSQL-centric maybe

    var q1 =
        (from d in ctx.SalesOrderDetailEnlargeds
         where d.ProductID == prodID
         select d).SuppressParameterSniffing();